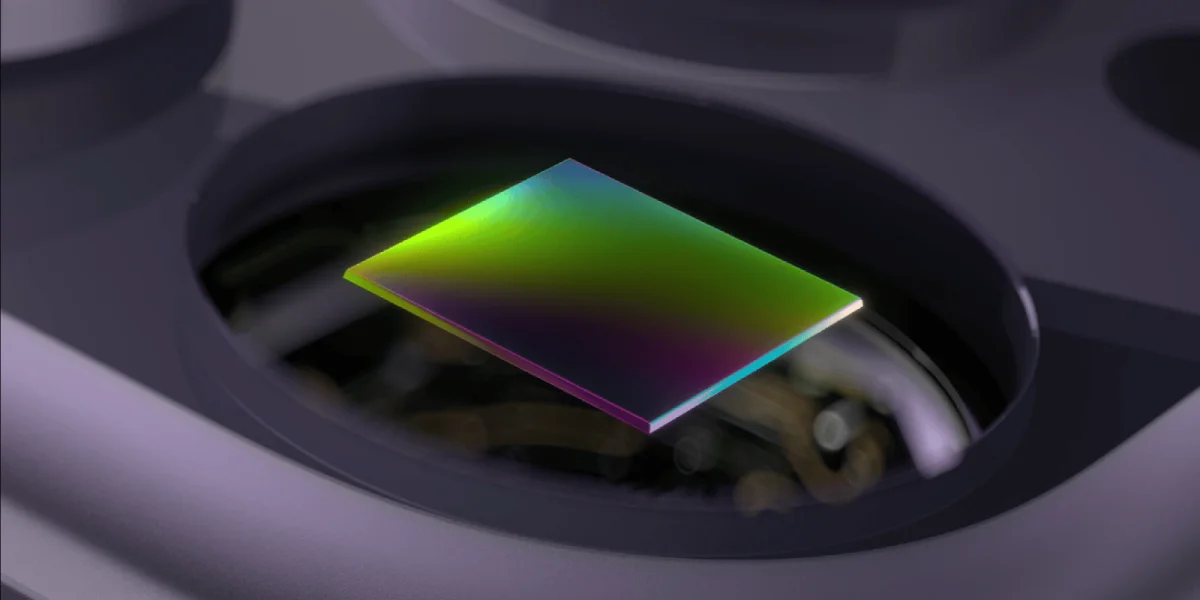

Researchers at Apple have introduced an AI model named DarkDiff that improves the quality of photos taken in extremely low-light conditions. The model integrates a diffusion-based image processing framework directly into the camera’s image signal processing (ISP) pipeline, enabling it to recover details from sensor data that would typically be lost in darkness.

Traditional methods often produce images that are either excessively grainy or oversmoothed, so fine details are obscured. In contrast, the new model leverages pre-trained generative diffusion models to analyze and reconstruct dark areas of images more effectively. By applying this technique during image capture rather than in post-processing, Apple aims to improve perceptual quality in challenging lighting scenarios.

The study, conducted in collaboration with Purdue University and titled "DarkDiff: Advancing Low-Light Raw Enhancement by Retasking Diffusion Models for Camera ISP", details how this approach outperforms existing methods across three low-light image benchmarks. The mechanism used in DarkDiff focuses on localized image patches, which preserves essential structures and reduces artifacts common in low-light photography.